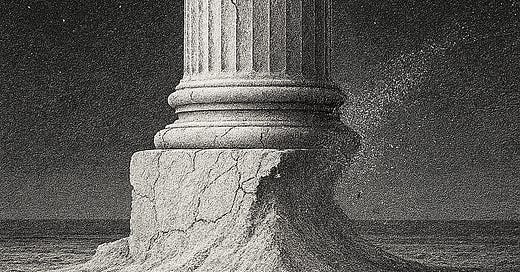

[EN] Foundations of Sand: The Replication Crisis

And why Germany's far right will soon be talking about it

TL;DR: US Vice President JD Vance tweeted about the reproducibility crisis -- and he was right. Only 36% of psychological studies can be replicated, in medicine sometimes only 11%. This costs billions and destroys trust in science. A broken incentive system rewards quantity over quality. p-Hacking becomes the norm - I experienced this myself. The problem is real. The danger: Populists like Vance use these weaknesses as weapons. Trump's new Executive Order declares reproducibility as the standard - and specifically targets DEI, climate, and health policy.

The choice is clear: Do we reform ourselves - or do we leave the field to those who want to gut science?

Cause for Concern

Last Saturday (May 24, 2025), US Vice President JD Vance tweeted: "There is an extraordinary 'reproducibility crisis' in the sciences, especially in biology, where most published studies are not replicable."

This message hit me with full force. Not because he was wrong. But because he was damn well right.

Almost exactly a year ago, I sat in a meeting room and made an uncomfortable prediction to colleagues: If I were the AfD, Germany's right-wing populist party, and wanted to attack and delegitimize the German university system, I would focus on research reproducibility. This is our most vulnerable point. The place where even critics with bad intentions can hit us without appearing anti-science. Now exactly that is being done by an American politician whom most German academics despise. And we have personally delivered him the ammunition.

The same weekend it became clear that it wouldn't stop at tweets. Just one day before Vance's post, on May 23, 2025 - though I only noticed it the following week - President Trump signed the Executive Order "Restoring Gold Standard Science". In it, he commits all federal agencies to introducing new standards for transparency, reproducibility, and independence of scientific advice. What sounds like a manifesto of the Open Science movement reveals itself upon closer inspection as political instrumentalization: The order explicitly criticizes diversity measures, references "abusive" COVID guidelines from the CDC, and climate models that are supposedly too pessimistic. In sum, a pattern emerges: Real scientific weaknesses are being used to delegitimize entire research areas -- public health, environment, social justice. The replication crisis becomes a weapon.

The problem is not that populists attack scientific institutions. The problem is that they hit real weak spots in doing so. When JD Vance or the AfD here in Germany - presumably soon - talk about the "replication crisis," we can't simply shout "Anti-science!" and hope the problem disappears. Because the crisis is real.

I'm not writing this as an outsider pointing fingers at others. I'm writing it as someone who was in the middle of it and still is. As a PhD who knows the system from the inside. As a diversity practitioner who sees daily how questionable research becomes expensive policy. That's exactly why I started this Substack, namely to point out the quality problems in DEI research that undermine my field of work.

This article about the replication crisis had been planned for a long time, but was supposed to appear much later. Vance's tweet made me move it up. Because when extreme politicians begin to attack our most vulnerable points, we can no longer wait and hope that everything will somehow work out.

"Security through obscurity" no longer works. The strategy of keeping scientific problems under wraps and hoping that no one outside universities notices them has failed. The replication crisis has long been public, the scandals are documented, the weak spots are known. Populists like Vance don't need insider information - they can simply refer to PubPeer, Retraction Watch, or Data Colada. The internet has torn down the walls of the ivory tower and airs our dirty laundry in public.

We face a choice: Do we reform ourselves, or do we let reform be imposed on us by politicians who want to weaken our institutions rather than strengthen them?

How Bad Is the Situation?

But how bad is the situation actually? Beyond years of individual cases and anecdotes: Are there hard numbers on the extent of the replication crisis?

The sobering answer was provided in recent years by large-scale replication projects. Perhaps the best known is the "Reproducibility Project: Psychology," which attempted to repeat 100 significant psychological studies. The result was shocking: Only 36% of the studies could be successfully replicated.

Similar projects in other areas confirmed the pattern. The "Many Labs" project could replicate 10 of 13 classical psychological effects across different laboratories - which was still considered a success. In experimental economics, 11 of 18 studies could be replicated.

In medicine, the situation is even more dramatic. A pharmaceutical company tried to reproduce 53 groundbreaking cancer studies - it was successful only in 6 cases, a failure rate of 89%. Even more shocking was the assessment by Richard Horton, editor-in-chief of the renowned medical journal The Lancet: "Much of the scientific literature, perhaps half, may simply be untrue. Plagued by studies with small sample sizes, tiny effects, invalid exploratory analyses, and flagrant conflicts of interest, science has taken a turn toward darkness." [own translation]

In other words: In large parts of science, a large share of replication attempts fail, and in some projects well over half. This doesn't automatically mean that the original studies were wrong - but it shows that our methods are far less robust than we thought.
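The percentages quoted above can be recomputed from the counts given in the text. The following is a minimal Python sketch; the dictionary and the function name `replication_rate` are illustrative choices, and the psychology entry simply treats "36% of 100 studies" as 36 out of 100, which is a simplification.

```python
# Replication counts as stated in the text: (successful, attempted).
# Assumption: "36% of 100 studies" is entered as 36 of 100.
projects = {
    "Reproducibility Project: Psychology": (36, 100),
    "Many Labs": (10, 13),
    "Experimental economics": (11, 18),
    "Preclinical cancer studies": (6, 53),
}


def replication_rate(successes: int, attempts: int) -> float:
    """Share of replication attempts that succeeded, in percent."""
    return 100.0 * successes / attempts


for name, (ok, total) in projects.items():
    rate = replication_rate(ok, total)
    print(f"{name:38s} {ok:3d}/{total:<3d} replicated: {rate:5.1f}%  failed: {100 - rate:5.1f}%")
```

The spread is wide: roughly three quarters of the Many Labs effects held up, while only about one in nine of the cancer studies did.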

The Price of the Crisis: Billion-Dollar Losses and Eroded Trust

The reproducibility crisis is not an abstract, academic problem. Its consequences are real, they are expensive, and they undermine the foundations of our knowledge society. The costs can be measured in two currencies: in billions of euros and dollars that are wasted, and in a currency that is incomparably harder to regain -- public trust.

The Economic Costs: A System Burns Money

The numbers are devastating: Conservative estimates assume that 50-90% of preclinical research is not reproducible. Pharmaceutical companies now routinely expect that only 10-25% of academic studies are replicable in their own laboratories. The result is a gigantic waste of resources. In the US alone, 28-50 billion dollars are wasted annually on irreproducible biomedical research. In Europe, the numbers are likely similarly devastating. With 129.7 billion euros in annual expenditure in Germany, we're talking about tens of billions of euros in waste per year.

A particularly devastating example is Alzheimer's research. In 2022, a Vanderbilt researcher named Matthew Schrag uncovered evidence that a groundbreaking 2006 study on amyloid-beta proteins was possibly based on falsified images, as the magazine Science reported extensively. This study was cited over 2,300 times and influenced the direction of Alzheimer's research for 16 years. NIH funding for amyloid-related Alzheimer's research rose from practically zero in 2006 to 287 million dollars in 2021. Based on this, drugs like Aduhelm were developed, which received FDA approval despite weak and contradictory evidence. The consequences were grave: A significant portion of patients experienced serious side effects like brain swelling and microbleeds. Several deaths are linked to the treatment, while the hoped-for cognitive improvement failed to materialize. Billions of US dollars flowed into a research direction that not only failed therapeutically but was partly based on data whose scientific integrity is now seriously questioned.

But this is only the tip of the iceberg. The indirect costs are even more dramatic: years of follow-up research built on false foundations, and drug development that pursues expensive dead ends. The industry has responded by increasingly ignoring academic research and routinely conducting its own validation studies before investing millions in development. This creates a "Valley of Death" between academic research and practical application -- a chasm that arose from lack of reproducibility.

These costs extend far beyond biomedicine. In the DEI field, I see daily how German organizations spend millions on diversity programs whose scientific foundation is shockingly thin. In the US alone, companies spend, according to reports cited by the British government, over 8 billion dollars annually on diversity training, although the evidence for their effectiveness is mixed to non-existent. Political decisions in education, business, and social policy are based on studies that may not be replicable. Every false policy recommendation costs not only money but also trust in evidence-based decision-making.

The Loss of Trust: When Science Squanders Its Credibility

The greatest damage, however, may be the loss of trust. The replication crisis undermines public trust in science precisely at the time when we need it most urgently. When people hear that "half of all studies may be wrong," why should they trust vaccination recommendations? Why take climate change research seriously? The crisis provides science skeptics and conspiracy theorists with perfect ammunition.

This was already visible during the COVID-19 pandemic, when lack of trust in science was a strong predictor of vaccine skepticism. Vaccine opponents referenced the "crisis of science" to justify their position -- and could draw on real examples of scientific failure. The shadow of Andrew Wakefield's fraudulent 1998 Lancet study, whose retraction is documented on Retraction Watch, still lies over the vaccination debate.

Every headline about falsified data or retracted studies strengthens those who claim you can't "trust the experts." This loss of trust is measurable and shows itself in the growing politicization of scientific topics. And this is exactly where the circle closes with JD Vance's tweet. When populists attack scientific institutions, we can no longer simply shout: "That's anti-science!" because they are partly right. They use real problems of science as weapons against scientific institutions. This is the perfidious thing about the situation: The criticism is justified, but the proposed "solutions" would worsen the problem, not improve it.

The Broken Machinery: Why Science Sabotages Itself

Before we can understand why the replication crisis arose, we must understand how science actually works. Not the idealistic version from university brochures, but the economic reality.

Science is a billion-dollar business, largely financed with tax money. In Germany alone, a total of 129.7 billion euros was spent on research and development in 2023 - that's more than the entire federal budget for defense. The money flows from taxpayers through ministries and funding organizations like the German Research Foundation (DFG) to universities, which use it to pay researchers, finance doctoral students, compensate subjects, and buy expensive equipment. With these funds, scientists then conduct studies, collect data and analyze it, write a manuscript, and submit it to a relevant journal.

And then the peer review system comes into play -- theoretically the comprehensive quality control of science. In practice, however, there is a gap between this claim and reality: The system often cannot fulfill the high expectations of error-free validation and instead serves more as a critical, in-depth plausibility check by specialist colleagues.

Peer review can do many things: It can identify obvious methodological errors, uncover gaps in argumentation, and ensure that studies correspond to the current state of research. What it cannot do: uncover data falsification. When Francesca Gino (more on her later) claims to have evaluated 13,000 insurance policies when she only had 3,700, no reviewer can detect that -- unless they demand the raw data, which practically never happens. And even when studies promise that data is 'available upon request,' this is often an empty promise. A recent (and still unpublished) study by Ian Hussey showed impressively that such requests are often refused or not answered at all. The postulated verifiability of research is thus often undermined even after publication.

Even in cases where raw data and analysis code are made available, it is not common for peer reviewers to recalculate the authors' analysis. This would also hardly be feasible, since the peer review system is based on the voluntary work of scientists who usually do this unpaid and alongside their actual research and teaching duties. Thus, the peer review system is indirectly financed with tax money, although it primarily benefits journals that are privately owned by a few, often highly profitable publishers.

Worse still: The system also invites abuse. Reviewers can force authors to cite their own work - the notorious "please cite these five works by me" comment. Peer reviewers can block competing research, reject innovative approaches, or simply indulge their personal whims.

The result: A system that rewards conformity and punishes risks. Exactly the opposite of what science needs. This creates a perverse system of incentives that rewards quantity over quality. Researchers must "publish or perish." Their career doesn't depend on whether their studies are replicable, but on how many publications they can show and in which journals they appear.

But here it gets really absurd: The research that was financed with public funds and peer-reviewed disappears behind the paywalls of profit-oriented publishers. Universities pay twice - first for conducting the research, then again for access to the results. The taxpayer finances research that they subsequently cannot read without paying again. Scientific results are therefore generally not accessible to the general public.

This creates machinery that is programmed to produce as many "significant" results as possible - regardless of whether they correspond to the truth. This is the perfect breeding ground for a replication crisis.

The Erosion of Authority: When Superstars Fall

The abstract replication crisis took on faces in recent years. And these faces belonged to the stars of behavioral research - people who gave TED Talks, wrote bestsellers, and were considered experts on honesty and ethical behavior. Their fall shows: The crisis has reached the elite of science.

Francesca Gino was a superstar. Harvard Business School, over 100 publications, one of the most cited researchers in her field. She researched dishonesty - why people lie, cheat, and behave unethically. In 2012, she published a groundbreaking study that showed that people are 10% more honest in tax forms when they sign the honesty declaration at the beginning instead of at the end. The Obama administration took notice, and governments worldwide studied whether they could recoup billions in tax losses with this simple trick.

The bitter irony: A researcher who was famous for studying dishonesty was herself accused of data manipulation in exactly this honesty study, and the study has now been retracted by the journal.

In May 2025, Harvard took an unprecedented step: For the first time in over 80 years, it revoked a professor's tenure - the practically permanent lifetime appointment. The reason: research misconduct in several studies, uncovered by the Data Colada Blog by Uri Simonsohn, Leif Nelson, and Joe Simmons.

In fact, Gino's case is not an exception. It's only extraordinary because she was caught. Cases like that of the Dutch social psychologist Diederik Stapel, who invented dozens of studies, or the countless retractions of questionable studies show: Science has a systemic problem. The website Retractionwatch.com tries to document these cases and even maintains a "leaderboard" of the authors with the most retractions, each of whom has had between 48 and 220 scientific articles retracted by journals.

It's often not the institutions themselves that uncover these problems. For someone with a statistical background, it's remarkable that in more than 80 years at Harvard University, with thousands of professors over that time (903 "tenured faculty" in 2023 alone), a professor has only now lost tenure for the first time. This suggests either an exceptionally honest culture or a high number of undiscovered cases. Instead of the institutions, it's scientists like the three researchers behind Data Colada who meticulously examine studies in their spare time and find irregularities, or the microbiologist Elizabeth Bik, who has uncovered image manipulations in hundreds of studies. It's platforms like Retraction Watch that document retracted studies and have created a database to capture the status quo. It's initiatives like FORRT (Framework for Open and Reproducible Research Training) that try to better train the next generation of researchers. All of this happens largely on a voluntary basis - people who love science and want to improve it.

From the Middle: My Own Journey from Accomplice to Critic

I'm not writing this as an outsider pointing fingers at others. I'm writing it as someone who was part of the problem myself.

It was during my PhD when an experienced researcher gave me advice that haunts me to this day: "You have to have a dialogue with your data." It sounded almost poetic. What he meant was anything but that. He encouraged me to fiddle with the data until it told me what I wanted to hear, until I found a story I could publish.

He didn't want to cheat. He wanted to help me secure my career. Because collecting data for years and then finding nothing significant is practically the end for a fresh academic career. Depending on the system, no publication in a journal means no PhD or a poorly graded one, no postdoc position, no professorship. He knew the system and tried to navigate me through the pitfalls.

That was the moment when I understood how normalized questionable research practices are in science. Not as conscious malice, but as "clever" research tactics passed on by well-meaning mentors to desperate junior scientists to succeed in a system maximally designed for competition:

"See if you can remove the outliers."

"Try a different statistical transformation."

"Maybe it works if you divide the groups differently."

"See if an interaction effect isn't hiding here."

Years later, when I switched to the DEI field, I encountered the problem again - only this time from the other side. Suddenly I was the one who was supposed to implement studies that others had produced with questionable methods.

I saw German organizations spend large sums on diversity training, based on studies on the effectiveness of such programs that proved to be shockingly thin upon closer inspection. I observed how concepts like microaggressions or trigger warnings or bias training were adopted into guidelines, although the underlying research was far less robust than media coverage suggested.

The perfidious thing: In the DEI field, the temptation is particularly great to accept weak evidence because the goals seem so honorable. Who wants to argue against diversity and inclusion? But this moral clarity makes research vulnerable to politically motivated interpretation.

Systemic Reasons

To understand the true causes of the crisis, we must distinguish between two problems: conscious fraud and systemically promoted "questionable research practices" (QRPs).

Cases like that of Diederik Stapel, who falsified datasets, or the data manipulations in the Francesca Gino case are spectacular cases of criminal fraud. They are the tip of the iceberg. The much larger problem, and the one more dangerous for the stability of science, lies in the gray area below: QRPs. These do not involve invented data, but the subtle manipulation of real data, often not even perceived as fraud, through p-hacking, selective reporting, or post-hoc adjustment of hypotheses.

This is exactly where the actual systemic analysis begins. Because while conscious fraud needs no complex explanation other than criminal energy, QRPs arise directly from the pressure and structures of the scientific enterprise. Why is this so? The answer leads us to one of the most uncomfortable insights of science research: Not all sciences are equally susceptible to these problems, but all sciences have the same incentives behind them.

The Italian researcher Daniele Fanelli posed a simple but brutal question: How often do different scientific disciplines find positive results? He analyzed over 2,000 studies from various fields and discovered a devastating pattern.

Space research had the lowest rate of positive results (70.2%), while psychology and psychiatry had the highest (91.5%). The odds that a study confirms its hypothesis or hypotheses were around 5 times higher in psychology and psychiatry than in space research, and 2.3 times higher in social sciences overall than in natural sciences.
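A factor of around 5 only makes sense as a ratio of odds, not as a ratio of the raw percentages. The short Python sketch below recomputes both from the two percentages quoted above; the variable and function names are illustrative, and the published factor presumably comes from a fitted model rather than from this back-of-the-envelope calculation.

```python
def odds(p: float) -> float:
    """Convert a probability into odds: p / (1 - p)."""
    return p / (1.0 - p)


p_space = 0.702  # share of positive results in space research (from the text)
p_psych = 0.915  # share of positive results in psychology and psychiatry (from the text)

ratio_of_probabilities = p_psych / p_space
ratio_of_odds = odds(p_psych) / odds(p_space)

print(f"ratio of probabilities: {ratio_of_probabilities:.2f}")
print(f"ratio of odds:          {ratio_of_odds:.2f}")
```

The percentages differ by a factor of about 1.3, while the odds differ by a factor of about 4.6, which is in the range of the reported "5 times."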

Fanelli's "hierarchy hypothesis" is as elegant as it is devastating: The "softer" a science, the fewer constraints there are on conscious and unconscious researcher bias. Physicists can't simply decide that the speed of light is different after all. Psychologists have significantly more leeway in interpreting their data.

This brings us to the concept at the center of the replication crisis: "researcher degrees of freedom." A team around Simmons, Nelson, and Simonsohn identified 6 different decision points where researchers can influence their data: Which participants are excluded? Which statistical tests are used? How are variables defined and combined?

Each of these decision points is completely legitimate in itself. The problem arises when researchers make these decisions after they already know or suspect how they will affect the result. It's the difference between calling a coin flip in advance and looking at the coin before calling "heads" or "tails."

In particle physics, for example, these degrees of freedom are extremely narrowly defined. When researchers at CERN search for a new particle, the analysis method is often developed "blind." That means it is tested on simulated data and finalized before the scientists see the real measurement results. This prevents them from -- even unconsciously -- adjusting their analysis so that a hoped-for signal appears. In psychology, on the other hand, almost everything is interpretable: What counts as an "outlier"? How long should a reaction time be to still count as "normal"? Which demographic variables should be included?

This flexibility transforms honest scientists into involuntary p-hackers. They test a hypothesis, find p = 0.07 - "not significant." So they remove a few extreme values: p = 0.053. Still not under 0.05. Maybe a different statistical transformation? p = 0.048. Bingo! Publication secured.

The researcher doesn't think he's cheating. He thinks he's optimizing his analysis. But statistically speaking, he has increased his α-error rate from 5% to possibly 30% or higher without noticing.
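How quickly the error rate inflates can be illustrated with a small simulation. The sketch below is a toy model under stated assumptions, not a reconstruction of any real study: both groups are drawn from the same distribution (so every "significant" result is a false positive), the analyst has three independent outcome measures, and each can be tested with or without an arbitrary outlier rule. The p-value uses a normal approximation, and all names and parameters are hypothetical.

```python
import math
import random
from statistics import NormalDist, mean, variance

ALPHA = 0.05


def p_value(a, b):
    """Two-sided p-value for a difference in means (normal approximation)."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    z = (mean(a) - mean(b)) / se
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def trim(xs, cutoff=2.0):
    """Drop 'outliers': values further than `cutoff` from zero (an arbitrary rule)."""
    return [x for x in xs if abs(x) <= cutoff]


def simulate(n_studies=2000, n_per_group=40, n_outcomes=3, seed=1):
    """Return (false-positive rate of one planned test, rate when picking the best test)."""
    rng = random.Random(seed)
    honest_hits = 0
    hacked_hits = 0
    for _ in range(n_studies):
        p_values = []
        for _ in range(n_outcomes):
            # Both groups come from the same distribution: there is no true effect.
            a = [rng.gauss(0, 1) for _ in range(n_per_group)]
            b = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(p_value(a, b))              # analysis as planned
            p_values.append(p_value(trim(a), trim(b)))  # "remove the outliers"
        honest_hits += p_values[0] < ALPHA     # only the first, planned test counts
        hacked_hits += min(p_values) < ALPHA   # report whichever analysis "worked"
    return honest_hits / n_studies, hacked_hits / n_studies


honest_rate, hacked_rate = simulate()
print(f"false-positive rate, one planned test:  {honest_rate:.3f}")
print(f"false-positive rate, best of six tests: {hacked_rate:.3f}")
```

For fully independent tests the inflation has a closed form: the chance of at least one false positive among k tests is 1 - (1 - α)^k, which for α = 0.05 and k = 7 is already about 30%. Real analysis variants are correlated, so the exact figure depends on which forks are tried.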

This brings us to one of the most fundamental problems of modern science: the confusion of exploratory and confirmatory research.

Confirmatory vs. Exploratory Research

There are two fundamentally different ways to do science. One is exploration - sifting through data looking for interesting patterns. The other is confirmation - targeted testing of pre-formulated hypotheses. Both are legitimate and necessary. The problem arises when you mix them and pretend that exploration is confirmation.

Imagine you go to a casino and bet on red at roulette. The ball lands on black. Now you say: "Actually, I had bet on black." That's exactly what happens in science constantly - only more subtly and unconsciously.

Exploratory research is like an expedition into unknown terrain. You collect data and see what you find. Maybe you discover interesting correlations, unexpected patterns, new hypotheses. The problem: With enough variables, you always find something. The website Spurious Correlations illustrates this perfectly - there, per capita cheese consumption correlates with the number of people who get tangled in bedsheets and die (r = 0.95). Purely by chance.
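The claim that "with enough variables, you always find something" is easy to check with pure noise. The following Python sketch generates independent random series and searches all pairs for the strongest correlation. It is an illustration under simple assumptions (200 unrelated variables with ten data points each, all names hypothetical) and not how the Spurious Correlations website works, which uses real time series.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def strongest_chance_correlation(n_variables=200, n_points=10, seed=7):
    """Search all pairs of pure-noise variables for the largest |r|."""
    rng = random.Random(seed)
    # Each series is independent noise, e.g. ten yearly values of an unrelated quantity.
    series = [[rng.gauss(0, 1) for _ in range(n_points)] for _ in range(n_variables)]
    best = 0.0
    for i in range(n_variables):
        for j in range(i + 1, n_variables):
            r = pearson_r(series[i], series[j])
            if abs(r) > abs(best):
                best = r
    return best


best_r = strongest_chance_correlation()
print(f"strongest correlation among pure-noise variables: r = {best_r:.2f}")
```

With 200 variables there are 19,900 pairs to choose from, so a very strong correlation turns up even though no variable has anything to do with any other.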

Confirmatory research works the other way around. You have a specific hypothesis, determine in advance how you will test it, collect new data, and see if your prediction is correct. Only this way can you really "confirm" that you have discovered something, and even then only with strong limitations.

The problem gets even worse due to the theoretical weakness of many fields. In fields like Organizational Behavior or large parts of psychology, theories are so vague and contradictory that you can practically "justify" any result after the fact.

A classic scenario: You collect data and find that leaders with higher emotional intelligence achieve worse team performance. This doesn't fit your original hypothesis? No problem. You simply switch the theoretical justification: "As the theory of optimal challenge shows, overly empathetic leaders might underchallenge their teams..."

It gets even more absurd when peer reviewers say: "The theory doesn't convince me, take another one" - although data collection has long been completed. So you simply exchange the justification without changing a single datum. The same result is now explained by a completely different "theory."

This is not an isolated case, but everyday life in many areas of science. The theoretical arbitrariness, especially of the social sciences, makes it impossible to make real predictions or falsify theories. Everything can be explained, nothing can be refuted.

This brings us to a fundamental problem: Even when individual researchers want to be honest, the system creates incentives that can push them into the gray area of questionable practices.

The Tragedy of the Commons: Why Individual Virtue Is Not Enough

The replication crisis is a classic example of a tragedy of the commons. Everyone has an incentive to behave badly, even though the collective result is harmful to everyone.

Imagine you are an honest junior researcher. You conduct a clean, well-powered study, preregister your hypotheses, and find... nothing: p = 0.23. No significant result. Your colleague in the neighboring lab does the same but tries different analyses until he reaches p = 0.047. He publishes in a good journal, you don't.

Who gets the postdoc? Who gets the professorship? Who gets the research funding?

The system becomes even more perverse through "publication bias": Journals accept almost exclusively "positive" results. Null findings - no matter how cleanly conducted, no matter how important for the research field - end up in the file drawer. A failed replication attempt is "uninteresting" to editors, even if it shows that a widespread finding is false.
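The effect of the file drawer on the published record can be sketched in a few lines. The simulation below is a toy model under stated assumptions: a small true effect of 0.1 standard deviations, 30 participants per group, and journals that publish only significant results in the expected direction. The p-value uses a normal approximation, and all names and parameters are hypothetical.

```python
import math
import random
from statistics import NormalDist, mean, variance


def run_study(rng, true_effect, n):
    """Simulate one two-group study; return (observed effect, two-sided p-value)."""
    treated = [rng.gauss(true_effect, 1) for _ in range(n)]
    control = [rng.gauss(0, 1) for _ in range(n)]
    diff = mean(treated) - mean(control)
    se = math.sqrt(variance(treated) / n + variance(control) / n)
    p = 2.0 * (1.0 - NormalDist().cdf(abs(diff / se)))
    return diff, p


def simulate_literature(n_studies=3000, true_effect=0.1, n=30, seed=3):
    """Compare the average effect across all studies with the average published effect."""
    rng = random.Random(seed)
    results = [run_study(rng, true_effect, n) for _ in range(n_studies)]
    all_effects = [d for d, _ in results]
    # Assumed publication rule: only significant, positive findings leave the file drawer.
    published = [d for d, p in results if p < 0.05 and d > 0]
    return mean(all_effects), mean(published), len(published) / n_studies


avg_all, avg_published, share_published = simulate_literature()
print(f"average effect, all studies:       {avg_all:.2f}")
print(f"average effect, published studies: {avg_published:.2f}")
print(f"share of studies published:        {share_published:.1%}")
```

In this setup every single study is honest, yet the published literature overstates the effect several times over, purely because of which studies get printed.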

There are now important open-access journals like PLOS ONE that publish research based on methodological solidity and independent of the result (which includes null findings and replications), more specialized journals like the Journal of Null Results that explicitly promote negative results, and formats like 'Registered Reports' where studies are accepted for publication before data collection, purely based on the quality of the proposal. But these remain a vanishing minority in the scientific enterprise.

The system systematically rewards those who apply questionable research practices and punishes those who are honest. Being rigorous means fewer publications, slower careers, less recognition.

In Germany, this pressure is particularly extreme. There is practically only one permanent position in the scientific enterprise: the professorship. Everything else - from postdoc to junior professorship - is temporary. The Academic Fixed-Term Contract Act ensures that researchers must either get a professorship or leave the system after a maximum of 12 years (6 years before, 6 years after the PhD).

The numbers are brutal: Only about 7% of all PhD holders ever become professors. This means 93% of all junior scientists fight for years in a system that will eventually spit them out. Under this existential pressure, every publication becomes a matter of survival.

When you know you only have a few years to collect enough high-profile publications to maybe snag one of the rare professorships - what do you do with an honestly conducted experiment that shows null results? You can't afford empty years.

The perfidious thing: Everyone knows it's like this. But everyone thinks: "If I'm the only honest one while everyone else cheats, I'm only destroying my own career without changing the system." So everyone goes along, hoping that everyone else will stop sometime.

But it would be too simple to only blame individual researchers. The real culprits sit in university administrations, appointment committees, research funding organizations, and publishers who have created and maintain these perverse incentives.

Universities preach excellence and integrity, but their decisions speak a different language. Whom do they hire? The candidate with 50 publications or the one with 10 clean, replicated studies full of null findings? Whom do they promote? The professor who generates media attention, or the one who does methodologically solid but "boring" basic research?

Everyone who has ever sat on an appointment committee knows the answer. Quantity counts, not quality. And when quality is spoken of, it is often derived from the impact factor or ranking of the journal, not from the quality of the individual article.

Universities love "rockstar" scientists like Francesca Gino - until the bubble bursts. They profit from the TED Talks, media appearances, consulting contracts with Fortune 500 companies. They ignore methodological doubts as long as the publicity is right. Harvard overlooked Gino's questionable working methods for years because she brought the university prestige and attention.

Voluntary Self-Commitment to the Rescue?

The strongest counter-movement to the described system failures runs under the banner of the "Open Science" movement. Its goal is to return science to its core values through principles like radical transparency (Open Data, Open Code), free access to knowledge (Open Access), and verifiability (preregistration).

A crucial lever that this movement has identified is the reform of outdated research assessment. This is exactly where several initiatives start, and the Coalition for Advancing Research Assessment (COARA) is the most ambitious of them. Over 700 organizations have committed to reforming their evaluation criteria - away from pure publication metrics, toward qualitative assessment and recognition of diverse research contributions.

COARA's 10 core commitments sound promising: recognition of the diversity of research contributions, primarily qualitative assessment through peer review, abolition of inappropriate journal metrics like the impact factor. Similarly, the Declaration on Research Assessment (DORA) tries to move universities away from fixation on journal impact factors.

The problem: The implementation gap is enormous. COARA explicitly respects the "autonomy of organizations" - which in practice means that each university can decide for itself how seriously it takes the reforms.

But the real problem lies deeper: The decision-making processes in universities are firmly in the hands of those who were and are successful in the old system. Professors dominate appointment committees, faculty councils, and senates. These are people who made their careers exactly in the system that is now supposed to be reformed. They collected their publications, chased impact factors, and gamed quantitative metrics - and were successful with it.

Why should they change a system that brought them to the top? If they admit that publication-based evaluation is problematic, they implicitly question their own career.

The cherished principle of 'freedom of research and teaching' from the Basic Law (the German constitution) often serves as a rhetorical shield for these forces of inertia. Any attempt to introduce binding quality standards or more transparent appointment procedures is fended off as an attack on the autonomy of science. In doing so, the original meaning of this fundamental right is perverted: It was created to protect research from political censorship, not to free it from scientific due diligence. Freedom of research is not synonymous with freedom from any accountability. Those who research on behalf of and at the expense of society, equipped with this freedom and the associated privileges, owe society in return the observance of appropriate research standards.

Most universities, however, treat such initiatives as PR exercises. They sign the declarations, hold a few symposiums, and then continue as before. When the next "rockstar" candidate with 100 publications comes up for appointment, the lofty words about "qualitative assessment" are quickly forgotten and no one asks whether the findings are replicable.

This is why voluntary reforms fail. Organizations only change under pressure - external pressure. Pharmaceutical companies didn't improve their research practices out of insight, but because regulators forced them to. Banks didn't tighten their risk management voluntarily, but only after the financial crisis.

Universities need similar pressure. Research funding organizations must change their criteria. Journals must reject studies without preregistration, or evaluate them much more critically. In the future, accreditation agencies should evaluate the quality of research practices, not just the number of publications. But this pressure is coming too slowly and too weakly. While scientists are still discussing whether and how to reform themselves, politicians like JD Vance are already using the system's weak spots for their own purposes.

Solutions That Actually Work

The good news: The solutions to the replication crisis are known and there are many motivated, excellent actors who are advancing them. The bad news: They require systematic changes that many in science don't want.

Methodological Approaches

Preregistration of studies is the most important lever. Researchers must publicly register their hypotheses, methods, and analysis plans before collecting data. This makes undisclosed p-hacking practically impossible - you can't retroactively paint the target around the arrow when the target is already fixed, and any deviation from the registered plan becomes visible. Platforms like OSF (Open Science Framework) make this easier today than ever before.
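Why a fixed target matters can be made concrete with a small simulation. The following is a minimal sketch, not an analysis from any real study: it assumes normally distributed data with a known standard deviation of 1, no true effect at all, and a researcher who measures ten independent outcomes. All function names and parameters (`run_study`, `n_outcomes`, and so on) are illustrative assumptions. In theory, picking the best of ten independent tests yields a false positive rate of about 1 - 0.95^10, roughly 40%, while the preregistered outcome stays near the nominal 5%.

```python
import random
from statistics import NormalDist, fmean

ALPHA = 0.05
STD_NORMAL = NormalDist()


def p_value(group_a, group_b):
    """Two-sided z-test p-value for a difference in means (assumes known sd = 1)."""
    n = len(group_a)
    z = (fmean(group_a) - fmean(group_b)) / (2 / n) ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def run_study(rng, n_per_group=30, n_outcomes=10):
    """Simulate one study WITHOUT any true effect; return one p-value per outcome."""
    return [
        p_value(
            [rng.gauss(0, 1) for _ in range(n_per_group)],
            [rng.gauss(0, 1) for _ in range(n_per_group)],
        )
        for _ in range(n_outcomes)
    ]


def false_positive_rates(n_studies=2000, seed=1):
    """Compare a preregistered outcome with 'report whatever worked'."""
    rng = random.Random(seed)
    prereg_hits = hacked_hits = 0
    for _ in range(n_studies):
        p_values = run_study(rng)
        prereg_hits += p_values[0] < ALPHA    # outcome fixed before data collection
        hacked_hits += min(p_values) < ALPHA  # best-looking outcome chosen afterwards
    return prereg_hits / n_studies, hacked_hits / n_studies


if __name__ == "__main__":
    prereg, hacked = false_positive_rates()
    print(f"preregistered outcome: {prereg:.1%} false positives")
    print(f"best of 10 outcomes:   {hacked:.1%} false positives")
```

The sketch models only one form of p-hacking, namely selective reporting of outcomes. Optional stopping or flexible exclusion of data points inflate false positives in a similar way.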

Registered Reports go even further: Journals evaluate and accept studies before data collection, based on research question and methods. The result doesn't matter - positive and negative findings are published. This eliminates publication bias at one stroke. As the study by Scheel et al. (2021) shows, the number of confirmed hypotheses decreases significantly when studies are registered, simply because publication is independent of the study's outcome.
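The mechanism behind publication bias can also be sketched in a few lines. This is an illustration under stated assumptions, not a reconstruction of Scheel et al.'s analysis: it assumes a small true effect of 0.2 standard deviations, 20 participants per group, and a "conventional" literature in which only significant positive results get published. The function name `simulate_literature` and all parameter values are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def simulate_literature(true_effect=0.2, n_per_group=20, n_studies=5000, seed=7):
    """Simulate many small studies of the same modest effect (assumes sd = 1)."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(0.975)   # two-sided 5% threshold
    std_error = (2 / n_per_group) ** 0.5   # standard error of the mean difference
    all_effects, significant_effects = [], []
    for _ in range(n_studies):
        treated = [rng.gauss(true_effect, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        effect = fmean(treated) - fmean(control)
        all_effects.append(effect)
        if effect / std_error > z_crit:    # "positive" finding
            significant_effects.append(effect)
    return {
        # Registered Reports: every study is published, whatever the result.
        "registered_mean_effect": fmean(all_effects),
        "registered_share_positive": len(significant_effects) / n_studies,
        # Conventional filter: only significant positive results appear in print.
        "filtered_mean_effect": fmean(significant_effects),
    }


if __name__ == "__main__":
    for name, value in simulate_literature().items():
        print(f"{name}: {value:.3f}")
```

Under these assumptions, the filtered literature consists entirely of "confirmed" hypotheses and reports an average effect several times larger than the true one, because a study this small only reaches significance when it overestimates the effect. When every study is published, the average lands close to the true effect and only a minority of studies are positive.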

Open Data and Open Code mean that other researchers can check the analyses. The painstaking uncovering of data manipulations in Francesca Gino's studies by Data Colada was only possible because the researchers gained access to some datasets. Had her raw data been openly accessible to everyone by default from the beginning, in the sense of comprehensive Open Data practices, the manipulations would potentially have been discovered much earlier or by a broader scientific community. Transparency is the natural enemy of fraud.

But methodological considerations alone are not enough. The incentive system must change - and that means universities, journals, and research funding must rethink.

Structural Approaches

Universities must reform their appointment criteria. Instead of using long publication lists as the primary measure, they should focus on an in-depth, substantive evaluation of a small number of contributions, for example the 3-5 most important and meaningful research achievements of each candidate. This 'best-of' approach should explicitly allow outstanding but perhaps still unpublished manuscripts, preprints, important datasets, or developed software to count as central proof of achievement. The evaluation must be primarily qualitative and content-related, not quantitative.

Journals must prioritize replications and null findings. The Journal of Null Results and initiatives like PLOS ONE's focus on methodological quality show how it's done. Every major journal should be required to reserve a certain percentage of its issues for replication studies.

Research funding must finance replications. Although the German Research Foundation (DFG) is slowly opening up to the topic, there are still no established, broadly based funding lines that make it easy to finance pure replication studies. Systematic replications thus remain the exception in the funding system, not the rule.

COARA commitments must become binding. The fine-sounding declarations about qualitative assessment must be translated into concrete personnel decisions. Universities that have signed COARA but continue to promote based only on publication counts should be publicly exposed. Since this is a commons problem, the legislature may also be called upon to set the necessary framework conditions.

The German Academic Fixed-Term Contract Act must be reformed. As long as only 7% of all PhDs receive a professorship and all others must leave the system after a maximum of 12 years, the pressure for publication chasing remains existential. Germany needs more permanent mid-level positions for researchers who don't want to or can't become professors. Other countries show that it's possible: In the US, UK, or France, there are significantly more permanent research positions below the professorship level.

Appointment procedures must become transparent. German universities must disclose the criteria by which they actually appoint - not what they claim in job advertisements. If quality is to come before quantity, this must be traceable in the minutes of appointment committees. In the interest of professionalization, the freedom of faculties to design these procedures at will must be restricted.

Training the next generation is crucial: All doctoral students should take mandatory courses in research ethics, statistical methods, and Open Science. Initiatives like FORRT show what such programs can look like. Additionally, replications should be part of every dissertation.

International coordination is indispensable. Science is global, but reforms are fragmented. The EU should make COARA principles a condition for Horizon Europe funding. Universities that pay lip service to reform but change nothing should be excluded from EU funds.

Publishers must be held accountable. Elsevier, Springer Nature, and other scientific publishers earn billions from publicly funded research. They could decide tomorrow to only publish preregistered studies or link all articles with Open Data. Regulatory pressure is needed here.

Whistleblowers must be protected. People like Matthew Schrag, who uncovered fraud in Alzheimer's research and even found problems in his own mentor's work, risk their careers. Institutional safe spaces and financial security are needed for researchers who report scientific misconduct.

The replication crisis is therefore not just an internal problem of science -- it has become a political target. With the Executive Order of May 23, the US government is getting serious: Scientific standards are now being redefined by decree. And although many demands are superficially welcome, such as more data accessibility or methodological transparency, the context shows a clear direction. It's not about better science, but about different science. One that disguises political intervention by dressing itself in the garb of "neutral standards."

At the Turning Point: Reform as an Imperative of Self-Preservation

The list of necessary reforms is long and their implementation takes time - time we may not have. Because while science is still debating the "how" of reforms, the crisis has long become a political weapon. Populists like JD Vance in the US or the AfD in Germany are not waiting for our self-healing. They are already using the real, well-documented weak spots of the system today as leverage to undermine the credibility of science itself.

It is crucial to distinguish between two types of criticism: constructive and destructive. Constructive critics like the researchers behind Data Colada love science and want to save it through transparency and rigorous methods. Destructive critics use the same examples -- a case like Francesca Gino -- to achieve a different goal: the delegitimization of expertise and evidence-based policy. Their message is not: "Make science better!" but: "Don't trust the experts!"

This is exactly why defensive denial of the problems is the worst of all strategies. The first and most uncomfortable truth is: The critics are right on the substance. The crisis is real. When superstars like Gino fall, institutions like Harvard, which celebrated them for years because of their prestige, are complicit. The problems are, as my own journey from accomplice to critic shows, not regrettable isolated cases, but the logical consequence of a system that systematically rewards quantity over quality in a "tragedy of the commons."

From this insight it follows inevitably: Appeals to individual virtue are not enough. Collective, systemic solutions are needed. The incentives in appointment procedures, at research funding organizations, and in the publication system must be consistently changed. The fine words of initiatives like COARA must be put into action, and the German Academic Fixed-Term Contract Act must be reformed to reduce existential pressure.

These reforms are no longer just scientifically necessary, they are vital for political survival. Because in the end there is a simple, brutal choice: Do we reform ourselves, or do we let reform be imposed on us by politicians who want to weaken our institutions rather than strengthen them?

The crisis, dangerous as it is, also offers a historic opportunity: to rebuild science as it always should have been. More transparent, more robust, more honest. A science that is committed to truth, not career pressure. A science that puts quality over quantity. A science that is strong enough to survive political instrumentalization. The US has already made this choice in part -- and it shows how thin the line is between improving scientific quality and ideological purging. The replication crisis is real. But the answer to it decides whether science emerges strengthened or hollowed out.

The question is only: Do we have the courage to fight this battle before others decide it for us?